AI goes inside Renesas MCUs, Dynamically Reconfigurable Processors

TOKYO — Every company that has pledged its faith to “smart manufacturing” is pinning its hopes on AI.

This brave new world requires a big investment in high-cost AI systems, along with the cost of setting up a “learning” platform and contracting with cloud service providers. The grand plan starts with big data collection so that the machine can learn and figure out something previously unknown.

That’s the theory.

In the real world, however, many companies are finding AI hard to implement. Some blame their inexperience in AI, or a shortage of in-house data scientists capable of making the most of AI. Others complain that they have not been able to establish the proof of concept of their installed AI systems. In any case, manufacturers are beginning to realize that AI is not an “if you build it, they will come” deal.

Enter Renesas Electronics.

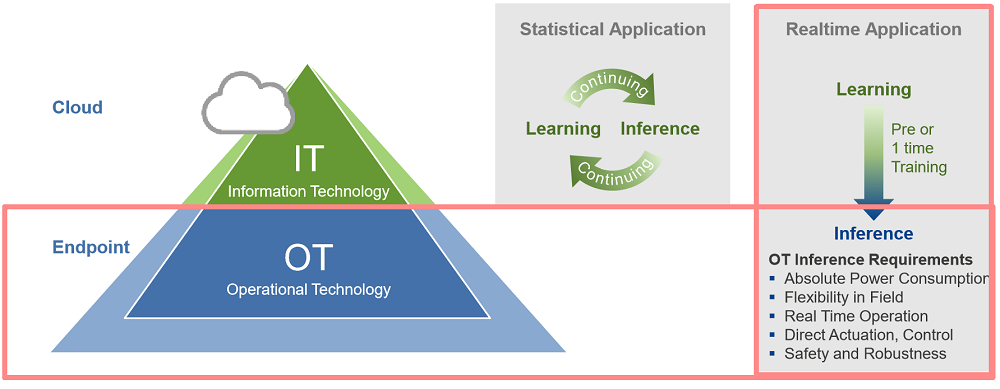

The Japanese chip company claims a leading position in the global factory automation market. It is proposing “real-time continuous AI” for the world of operational technology (OT). This approach contrasts sharply with “statistical AI,” often pitched by big data companies to promote automation in the world of information technology (IT).

Statistical AI for IT vs. continuous AI for OT (Source: Renesas)

Yoshikazu Yokota, executive vice president and general manager of industrial solution business unit at Renesas, told EE Times that embedded AI is critical for fault detection and predictive maintenance in OT. When an anomaly pops up in any given system or process, embedded AI can “make decisions locally and real time,” he explained. Renesas proposed the idea for “AI at endpoints” three years ago and started to experiment with it in its own Naka semiconductor fab.

“Our plan is to enable real-time inference in OT, while incrementally increasing AI capabilities at endpoints,” said Yokota.

Yoshikazu Yokota, executive vice president at Renesas, plans to focus on offering real-time inference in OT.

By bringing AI in baby steps to factory floors, Renesas hopes to help customers who are struggling to complete the proof of concept on their own AI implementation to understand their return on investment in AI.

When to apply AI to OT

Mitsuo Baba, senior director of the strategy and planning division of Renesas’ Industrial Solution business unit, told us that AI can be best applied to OT when specific issues — in production lines for example — are already identified.

For example, suppose there is a highly skilled operational manager who is experienced enough to detect certain anomalies in a factory. Instead of sending this manager to check out every stage of the manufacturing process, “We could use AI to draw the line — and define — when and where an abnormal situation begins to emerge during the production defects,” said Baba. AI could be the watchful eye monitoring the production line continuously, to keep small product defects from advancing to the next stage of production.

In such a factory automation example, AI needs to be trained only once, based on pre-identified issues. AI inference then runs on endpoint devices in real time, without returning to the cloud. Baba said 30 Kbytes of data is usually enough for endpoint inference, whereas statistical AI, which does both learning and inference, typically requires processing as much as 300 megabytes of data in the cloud.
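To make the "train once, infer locally" idea concrete, here is a minimal sketch in Python. It is not Renesas's method. It assumes the simplest possible stand-in for a model, a mean-and-tolerance threshold learned once from readings known to be normal, and all names (`ThresholdModel`, `train_once`, `is_anomaly`) and sensor values are hypothetical. It also works out the ratio between the two data sizes Baba cites, assuming decimal units.

```python
from dataclasses import dataclass
from statistics import mean, stdev


@dataclass(frozen=True)
class ThresholdModel:
    """Hypothetical 'model': two numbers, small enough for any MCU."""
    center: float
    tolerance: float


def train_once(normal_readings, k=3.0):
    """One-time offline training on readings known to be normal."""
    return ThresholdModel(
        center=mean(normal_readings),
        tolerance=k * stdev(normal_readings),
    )


def is_anomaly(model, reading):
    """Local inference: one subtraction and one comparison per sample."""
    return abs(reading - model.center) > model.tolerance


# Illustrative (made-up) sensor values, not data from the article.
normal = [10.0, 10.2, 9.9, 10.1, 9.8, 10.0]
model = train_once(normal)

# Each new sample is judged on the endpoint, with no round trip to the cloud.
stream = [10.1, 9.9, 12.5, 10.0]
flags = [is_anomaly(model, x) for x in stream]
print(flags)

# Ratio of the two figures quoted above, assuming decimal units
# (300 megabytes = 300,000,000 bytes; 30 Kbytes = 30,000 bytes).
ratio = (300 * 1000 * 1000) // (30 * 1000)
print(ratio)
```

In this sketch only the third sample is flagged, and the cloud-side figure comes out about 10,000 times larger than the endpoint figure. A real deployment would use a trained model rather than a simple threshold, but the division of labor is the same: learning happens once, and the per-sample check stays cheap enough for an MCU.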

In short, Renesas is advocating AI inference that can be done on an MCU.

Rather than replacing existing production lines with brand-new AI-enabled machines, which would be costly, Renesas is proposing an “AI Unit Solution” kit that can be attached to current production equipment.

Baba said Renesas has no plans to challenge AI chip companies like Nvidia. “Our goal is to lead a new market segment of embedded AI, in which data required for inference is so small that it can even run on existing MCU/MPU,” Baba said.